In my experience a mid-90s Dodge, even with an engine that is not super healthy, will still start very easily, requiring very little of the starting battery's capacity. Newer vehicles have much higher parasitic loads, and one sitting unused for 3 weeks can deplete the engine battery to the point where it might not have enough juice to start the engine. To extend the time these newer vehicles can sit unused and still start, manufacturers install larger capacity batteries, which inherently have more cold cranking amps (CCA), so the CCA ratings end up much higher than what is actually required to start the engine.

There are lots of complaints on regular automotive forums about extremely short battery life in newer vehicles that often sit unused. Most of the population does not know that a lead acid battery needs to stay as close as possible to fully charged to have a respectable lifespan, and most act as if the alternator were a magical instant battery charger. So the starting batteries in rarely driven or short-trip vehicles are in fact being deep cycled and then chronically undercharged, and they fail at 2 years or less. Since automotive designers can't really eliminate the parasitic loads on these newer vehicles, the best compromise is to install larger capacity batteries so that the vehicle has a better chance of starting after being left at the airport for 3 weeks. Lots of newer vehicles also have much larger electrical requirements just to run the accessories. Larger batteries are needed not only to give some buffer against alternator failure, but also to account for lower alternator output when hot and idling at low rpm. However, the starter motors on modern vehicles largely use gear reduction, and the actual amp draw during engine cranking is still not a huge load. Look at all the tiny lithium jump starters, capable of only 200 CCA or perhaps 400 CCA, which can easily crank and start engines over and over. Engines just do not require all that much to start.

However, this is off topic and not really pertinent to the OP's question; I am only replying to Jim's claim that I am wrong. So I will not bother arguing anymore on this particular topic, and I apologize to the OP and to Bob. Debating and nitpicking small details is not helping the OP.

There are multiple ways to get the alternator to charge house batteries quickly and effectively (to 80% state of charge or so) and keep the engine battery isolated with the engine off. The simple continuous duty solenoid, rated for at least 100 amps continuous and triggered by the blower motor circuit, is easy to wire up and effective. On Dodges, the blower motor circuit is not live during engine cranking, so any delicate electronics hooked to the house batteries will not be damaged by surges when the starter is disengaged. There are many ways to trigger a solenoid to parallel the batteries.

There are many different methods to parallel the batteries with the engine running and separate them with the engine off. There is no one right way; each has its advantages and disadvantages. I chose a manual 1/2/Both/Off switch, but I must remember to turn the switch, and also be sure not to switch it to OFF with the engine running, or POOF go the diodes in the alternator.

When the engine is running and the alternator is producing current and holding battery voltage over 12.8 or so, there is NO amp flow between the batteries themselves. The blower motor circuit on Dodges is live with the key turned to ON, but not when it is turned to START. So for the short duration that the key is at ON before engine cranking, the house battery will be drawing from the engine battery, but as soon as the engine starts the alternator will be feeding both sets of batteries, and the house batteries will take the majority of the current if the engine battery was fully charged.

One can also put an illuminated manual switch inline on the solenoid trigger circuit from the blower motor and choose when to parallel the batteries at the flip of said switch, if one is worried about the engine battery feeding the house batteries with the engine OFF but the key turned to ON (not START). Also, depleted batteries can ask for so much current that, on a cold engine, they present a rather heavy load. Some sites claim that each 25 amps an alternator produces requires 1 engine HP. Some others claim it is much less, but I don't think they are accounting for inefficiencies.
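As a rough sanity check on the "25 amps per HP" claim, here is a minimal sketch of the arithmetic. The 14.2 V charging voltage and the 55% combined alternator and belt efficiency are my assumptions for illustration, not measured values, and the function name is made up.

```python
WATTS_PER_HP = 745.7  # one mechanical horsepower expressed in watts


def alternator_hp(amps, volts=14.2, efficiency=0.55):
    """Estimate the engine HP needed to produce `amps` of alternator output.

    ASSUMPTION: `volts` and `efficiency` are illustrative guesses, not
    measurements from any particular alternator.
    """
    electrical_watts = amps * volts                    # power delivered electrically
    mechanical_watts = electrical_watts / efficiency   # power taken from the belt
    return mechanical_watts / WATTS_PER_HP


if __name__ == "__main__":
    for amps in (7, 25, 64, 85):
        print(f"{amps:>3} A -> about {alternator_hp(amps):.2f} HP")
```

Under those assumptions 25 amps works out to a bit under 1 HP, and to roughly half that if losses are ignored entirely, which may be where the lower figures come from.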

Often I will let my cold engine idle with only the load of the starting battery on the alternator (not including the juice required to run the fuel pump and ignition) before turning my manual switch to 'BOTH', and my ammeter, which reads amps into the batteries, not total alternator amps, will go from about 7 amps right up to 64. The engine note changes as soon as the switch is flipped. If it is also wet out, rpms above 1300 with a cold alternator and a depleted battery will cause my single V belt to start slipping and squealing as amperage approaches the 85 range, so often I choose not to feed the depleted battery until the moisture has been burned off and the belt has proper traction. This is with a single group 31 12v battery rated at 130AH capacity. A pair of depleted GC batteries can draw even more amps, so being able to choose when the alternator feeds the house batteries, via an illuminated switch on the solenoid trigger, can be very desirable.

I'd recommend moving the interior lights, and the dashboard Ciggy plug receptacle and stereo to the house battery fuse block, so that these devices draw from house battery(s), and not the engine battery. I personally like to keep the Ignition/engine battery at full charge at all times, with ALL other loads on the house battery. Some choose other methods. There is more than one way to skin a cat.

Those considering AGM batteries as house batteries would do well to read this article by Mainesail.

http://forums.sbo.sailboatowners.com/showthread.php?t=124973

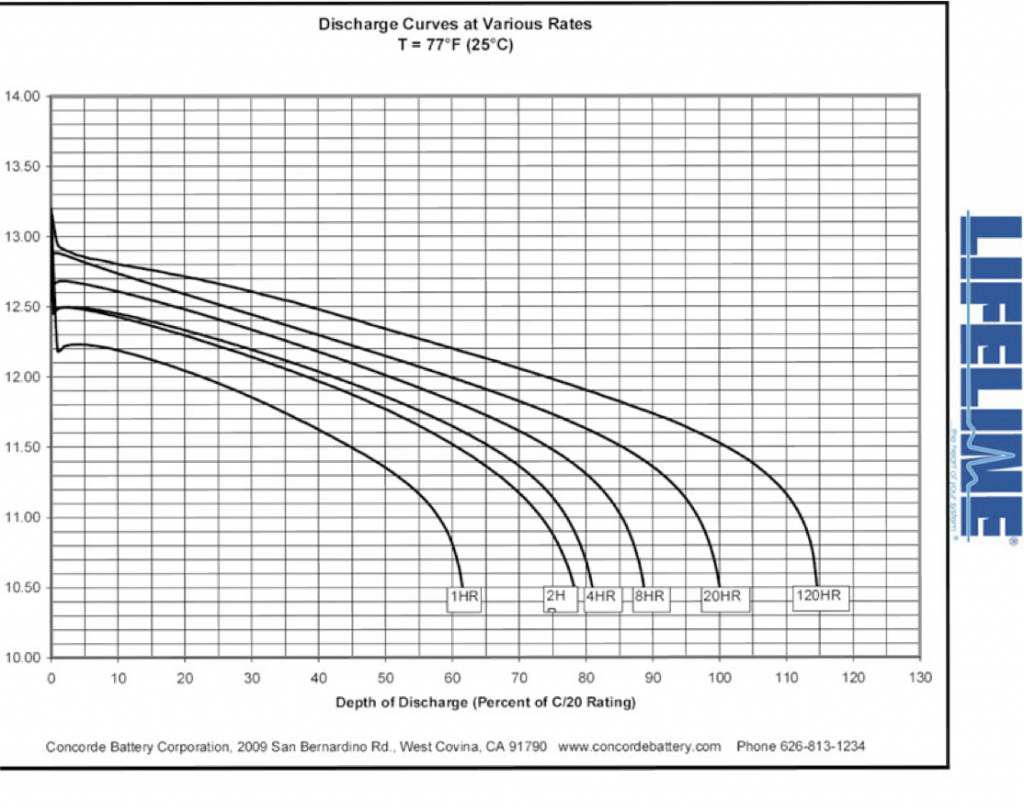

In short, he stresses that AGMs don't do well unless there is solar/wind power to top them off, and that they have minimum recommended charge rates, benefit from high recharge rates, and protest at slower recharge rates when deeply cycled.

As always, it is better, cheaper and easier to use less electricity than to create gobs of it to replenish depleted batteries. I am guesstimating the OP will require 45 to 65 amp hours each night and will have 115 or so total to use without going below 50% of the battery capacity. If 65 amp hours are consumed, then returning 45 of those can be done in an hour of driving, IF the charging circuit is thick, and the thicker the better, and engine rpms remain above ~800. Mine idles hot at 525 rpm; I am not sure about a '94 with a 3.9 V6. A clamp-on ammeter here can be invaluable to see what the alternator is actually capable of producing. Guessing is no fun.
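The budget above can be written out as a small sketch. The 230 amp hour bank size is my assumption, inferred from 115 usable amp hours at a 50% floor; the function names are made up for illustration.

```python
def usable_amp_hours(bank_capacity_ah, max_depth_of_discharge=0.5):
    """Amp hours available before crossing the chosen discharge floor."""
    return bank_capacity_ah * max_depth_of_discharge


def average_amps_needed(amp_hours_to_return, hours_of_driving):
    """Average charging current required to return a given number of amp hours."""
    return amp_hours_to_return / hours_of_driving


if __name__ == "__main__":
    bank_ah = 230  # ASSUMPTION: inferred from "115 or so" usable at 50%
    print("usable:", usable_amp_hours(bank_ah), "Ah")
    for nightly_use in (45, 65):
        share = nightly_use / usable_amp_hours(bank_ah)
        print(f"{nightly_use} Ah per night is {share:.0%} of the usable budget")
    print("average amps to return 45 Ah in 1 hour:", average_amps_needed(45, 1))
```

Returning 45 amp hours in one hour means the batteries must average 45 amps into them for the whole drive, which is why the thickness of the charging circuit matters so much.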

I own this one:

http://www.amazon.com/Craftsman-Digital-400A-Clamp-On-Ammeter/dp/B003TXUZDM

It reads very closely with my Shunted meter.

The other 20 amp hours and the extra 10% or so required to return the batteries to a true full charge would take another 4 hours of driving, and as that is not going to happen in the OP's stated usage, the batteries will NOT be returned to full charge each day. If they were subjected to 2 weeks of cycling from 50 to 80% and back to 50%, they would only have about 80% of their original capacity to deliver. But the OP states that they will have 48 hours to plug in every 5 days.

This ability not only to give the batteries a break from being cycled, but also to give them enough time to reach full charge via a grid powered charging source, is really key to keeping them happy, and healthy enough that they can be ridden hard the 5 other days. While they might not be brought down to 50% the first night, by night 5 they could very well be brought below 50% if the same amount is used each night without a return to full charge each day.
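To see how the daily shortfall stacks up over the 5 days, here is a minimal sketch. It assumes a 230 amp hour bank, 65 amp hours used each night and 45 returned each day; it ignores charging inefficiency and capacity loss, so the real numbers would likely be somewhat worse.

```python
def simulate_nights(capacity_ah, nightly_use_ah, daily_return_ah, nights):
    """Return the state of charge (0..1) at the end of each night.

    ASSUMPTION: every amp hour returned is stored; real lead acid
    batteries need roughly 10% more than was removed.
    """
    stored = capacity_ah  # start from a true full charge
    lows = []
    for _ in range(nights):
        stored -= nightly_use_ah
        lows.append(stored / capacity_ah)
        # Daytime driving returns some charge, never above full.
        stored = min(capacity_ah, stored + daily_return_ah)
    return lows


if __name__ == "__main__":
    for night, soc in enumerate(simulate_nights(230, 65, 45, 5), start=1):
        print(f"night {night}: {soc:.0%}")
```

With these assumed numbers the bank stays above 50% for the first three nights and drops below it after that, which is the pattern described above.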

There is a somewhat respected battery charger over on RV.net that can do 40 amps, will do 14.7v absorption voltage, and has the manual option of forcing a 15.7v equalization cycle, which is a forced overcharge that restores a battery to its maximum remaining available capacity, and is important to do when heavily cycling flooded batteries. I have no personal experience with this charger, but there are some battery nerds over there who respect its abilities, and it is a good match for a pair of 6v golf cart batteries. However, it is not capable of powering 12v loads whilst charging the batteries. It will likely sense the changing loads, suspect a problem and shut down.

http://www.amazon.com/Black-Decker-...bs_auto_1?ie=UTF8&refRID=00MP37VKANYCF5S16QFS

If the vehicle is to be lived in during those 48 hours, then an RV converter is a better option. The Iota DLS-45 will do 14.8V when the batteries are depleted and the cabling to the batteries is short and thick. There are lots of reports of these converters not going into 'boost' mode and not applying their maximum amp rating when desired. Usually this is because the wiring between converter and batteries is too long and too thin. RV converters come without alligator clamps, so one must provide their own cabling, and RV manufacturers notoriously underwire wherever possible for their bottom line.

Also, 'surface charge' is responsible for many problems when living off battery power. When the alternator has been feeding the batteries 20+ amps for an hour or 2 and the engine is then shut off, a voltage reading will show them up in the 13.4 volt range even though the batteries are far from fully charged.

Far too many vandwellers see this 13.4 volts of the surface charge, and declare their batteries fully charged, or even more than fully charged, when in fact the batteries are far from fully charged. It can take 24 hours or more for the surface charge to dissipate.
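A minimal sketch of the point about surface charge: a voltage reading only says something about state of charge after the battery has rested. The voltage table below is a rough rule of thumb for a 12v flooded battery and is my assumption; the battery manufacturer's own chart should be preferred.

```python
# ASSUMPTION: rough rule-of-thumb resting voltages for a 12 V flooded lead
# acid battery, illustrative only. Manufacturers publish their own charts.
RESTING_VOLTS_TO_SOC = [(11.8, 0), (12.0, 25), (12.2, 50), (12.4, 75), (12.7, 100)]


def estimate_soc(volts, hours_rested, min_rest_hours=24):
    """Return an approximate state of charge in percent, or None if the
    battery has not rested long enough for surface charge to dissipate."""
    if hours_rested < min_rest_hours:
        return None  # reading is inflated by surface charge, do not trust it
    if volts <= RESTING_VOLTS_TO_SOC[0][0]:
        return 0.0
    if volts >= RESTING_VOLTS_TO_SOC[-1][0]:
        return 100.0
    # Linear interpolation between the two nearest table entries.
    for (v_lo, soc_lo), (v_hi, soc_hi) in zip(RESTING_VOLTS_TO_SOC, RESTING_VOLTS_TO_SOC[1:]):
        if v_lo <= volts <= v_hi:
            fraction = (volts - v_lo) / (v_hi - v_lo)
            return soc_lo + fraction * (soc_hi - soc_lo)


if __name__ == "__main__":
    print(estimate_soc(13.4, hours_rested=0.5))  # None: just came off the alternator
    print(estimate_soc(12.2, hours_rested=24))
```

The 13.4 volt reading right after a drive returns no estimate at all here, which is the honest answer until the battery has rested.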

Please read the following article, also by Mainesail.

http://www.pbase.com/mainecruising/battery_state_of_charge

When a vehicle has recently been driven and is then plugged into the grid, the charging source, either an automatic battery charger or an RV converter, will see this surface charge, assume the batteries are fully charged (when they are NOT), and then apply only enough amperage to hold float voltage. In such cases it is possible that 48 hours at 13.6 volts will NOT fully charge the batteries. 2 months ago I took my group 31 to 50% state of charge, plugged into the grid, and set my power supply to 13.6v, and 5 days later the specific gravity revealed it was NOT fully charged. It required 2 more hours at 14.9v before SG climbed to 1.280 or higher on all cells.

One must be smarter than "smart" charging sources. One needs to trick them by applying enough of a load to bring the battery voltage below 12.8v, and only then plug in the charging source. This tricks the smart charger into bringing the batteries up to the mid 14 volt range, and the charging source will apply its maximum output until it nears this voltage range. If the cabling between charging source and battery is too thin, the charging source's output terminals reach the mid 14s well before the batteries themselves do, and charging is not as fast or as effective as it could be in the time plugged into the grid.

In short, any charging source should be wired short and thick to the battery bank. It is not just about the ampacity of the wire used, but about making sure the battery voltage is as close as possible to the output terminal voltage of the charging source. Very, very few charging sources have separate voltage sense wires, which carry no load and thus suffer no voltage drop, allowing the charger to compensate for voltage drop on the circuit.
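To put rough numbers on "short and thick", here is a minimal sketch of the voltage drop arithmetic (amps times resistance). The resistance figures are the standard table values for copper at about 20 C; the 15 foot run and 40 amp current in the usage lines are hypothetical, and the sketch assumes the return path is a cable of the same gauge and ignores fuses and terminals.

```python
# Ohms per 1000 ft of copper wire at about 20 C (standard AWG table values).
OHMS_PER_1000_FT = {10: 0.9989, 8: 0.6282, 6: 0.3951, 4: 0.2485, 2: 0.1563}


def voltage_drop(awg, one_way_feet, amps):
    """Voltage lost in the cabling between charging source and battery.

    ASSUMPTION: positive and negative runs are the same gauge and length,
    so the circuit length is twice the one-way distance.
    """
    round_trip_feet = 2 * one_way_feet
    resistance_ohms = OHMS_PER_1000_FT[awg] * round_trip_feet / 1000
    return amps * resistance_ohms


if __name__ == "__main__":
    # Hypothetical example: 15 ft one way, 40 amps of charging current.
    for awg in (10, 4, 2):
        print(f"{awg} AWG: {voltage_drop(awg, 15, 40):.2f} V lost")
```

In that hypothetical case 10 AWG loses about 1.2 volts and 4 AWG about 0.3 volts, so a charger holding 14.8v at its own terminals would have the batteries seeing roughly 13.6v versus 14.5v while that current is flowing.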

Any and all charging sources should be used whenever possible to keep lead acid batteries as close to fully charged as possible. Making sure those charging sources can do their job properly is achieved by short lengths of thick copper between charging source and battery. Overkill is possible, but unlikely given the price of copper and the wire ampacity charts that mislead people into thinking inadequate wiring is adequate for the task of recharging batteries.

When batteries are cycled deeply every day, and their ability to power the required devices for the required time is important to one's health and not just a matter of convenience, then one needs to take measures to prevent bad things from happening. The easiest way to make sure any charging source is doing all it can is large amounts of copper, properly terminated and fused, along with the awareness that it is rather difficult and time consuming to actually fully charge a lead acid battery.

With the proper tools, such as a Hydrometer, and an Ammeter along with a voltmeter, one can gain a lot of experience with how their batteries are responding to their discharging loads, and how well the charging sources are doing to return what was used, along with about 10% more to account for inefficiencies.
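For anyone logging ammeter readings by hand, a minimal sketch of turning them into amp hours, plus the roughly 10% extra that has to go back in. The function names are made up, and the 10% overhead is the rule of thumb from above rather than a measured figure for any particular battery.

```python
def amp_hours(samples):
    """Integrate (hours_elapsed, amps) readings with the trapezoid rule."""
    total = 0.0
    for (t0, a0), (t1, a1) in zip(samples, samples[1:]):
        total += (a0 + a1) / 2 * (t1 - t0)  # average amps times elapsed hours
    return total


def amp_hours_to_return(amp_hours_used, overhead=0.10):
    """Amp hours a charging source must supply to replace what was used.

    ASSUMPTION: a flat 10% overhead for charging inefficiency.
    """
    return amp_hours_used * (1 + overhead)


if __name__ == "__main__":
    used = 65
    print(f"{used} Ah used -> about {amp_hours_to_return(used):.1f} Ah to return")
```

The same `amp_hours` function works for discharge readings overnight and for charge readings during a drive, so the two totals can be compared directly.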

Without these tools, one is blind, and while it works "just fine", for a while, at some point the batteries will not have enough capacity for the system to work "just fine". And then panic sets in, and then research, and then one might take the steps required to prevent prematurely killing the next set of batteries.

My intention on this forum is to prevent the premature killing of that 'learner' set of batteries and their replacements. I despise arguing, but not as much as I despise misleading information on a subject with which I am intimately familiar.

One need not strive for absolute perfection in the treatment of their batteries; they are of course only batteries, and only rented. But one should at least know what ideal perfection is, draw a line in the sand somewhere, and strive to reach that line rather than burying their head in the sand well short of it and demanding company there too.